How Ethernet Will Win in the AI Networking Battle

The AI Boom Meets Network Demands

With artificial intelligence (AI) rapidly transforming industries—from healthcare and finance to autonomous vehicles and robotics—the need for ultra-fast, scalable, and efficient data networking has never been greater. The rise of large language models (LLMs), generative AI, and real-time data analytics is pushing traditional network infrastructures to their limits.

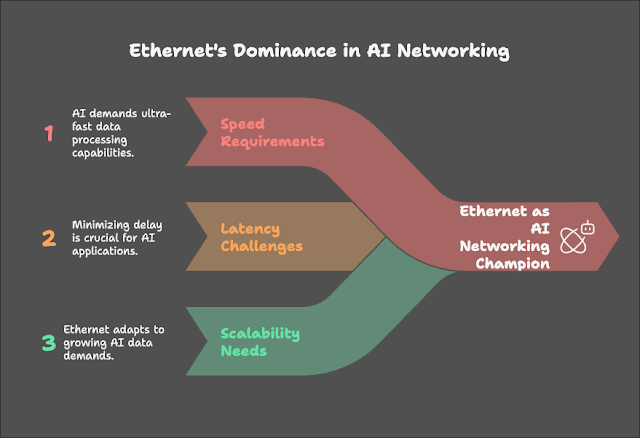

In this high-stakes contest of speed, latency, and scalability, Ethernet is emerging as the likely winner.

What Is the AI Networking Battle?

AI workloads are uniquely data-intensive. Training large models such as GPT-4 or Google Gemini requires moving vast amounts of data rapidly between GPUs, CPUs, and storage, both within and across data centers. This has triggered a networking arms race.

Several technologies are competing for dominance in this space:

InfiniBand: Popular in high-performance computing (HPC) due to its low-latency and high-throughput design.

Proprietary Interconnects: Vendor-specific links such as NVIDIA’s NVLink or the interconnect Google uses between its TPUs.

Ethernet: The tried-and-tested backbone of enterprise networking.

The battle is to determine which technology can deliver the best performance, scalability, cost-efficiency, and flexibility to support the future of AI.

Why Ethernet Is Poised to Win

1. Massive Ecosystem and Vendor Neutrality

Ethernet is open, standardized, and supported by a broad range of vendors including Arista Networks, Cisco, Broadcom, Marvell, and others. Unlike InfiniBand, which is largely dominated by NVIDIA through its Mellanox acquisition, Ethernet’s openness allows innovation and cost competition.

According to Arista CEO Jayshree Ullal, the company is betting heavily on Ethernet for AI networks because it offers vendor neutrality and long-term scalability. This openness reduces vendor lock-in, which is a key concern for enterprises.

"AI is the new cloud. And the new cloud is built on Ethernet," says Ullal in her recent Investor’s Business Daily interview.

2. Scalability to 800G and Beyond

Ethernet standards are evolving quickly. We are already seeing:

400G Ethernet deployments

800G and 1.6T Ethernet on the horizon

These advances enable horizontal scaling of GPU clusters, AI supercomputers, and training data centers without the complexity of proprietary fabrics.
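As a rough illustration of why link speed matters for horizontal scaling, the sketch below estimates the ideal time to move a payload over a single link at the rates listed above. The 1 TB payload and the function name are assumptions chosen for illustration; real transfers take longer because of protocol overhead and congestion.

```python
# Ideal (zero-overhead) transfer time over one Ethernet link.
# The payload size is a hypothetical example, not a measurement.

LINK_RATES_GBPS = {"400G": 400, "800G": 800, "1.6T": 1600}


def transfer_seconds(payload_bytes: int, rate_gbps: float) -> float:
    """Return the seconds needed to send payload_bytes at rate_gbps (gigabits/s)."""
    bits = payload_bytes * 8
    return bits / (rate_gbps * 1e9)


if __name__ == "__main__":
    payload = 10**12  # 1 TB (decimal), assumed for illustration
    for name, rate in LINK_RATES_GBPS.items():
        print(f"{name}: {transfer_seconds(payload, rate):.1f} s")
```

Under these assumptions, each doubling of the link rate halves the ideal transfer time, which is the basic reason faster ports let a cluster grow without adding a proportional number of links.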

Ethernet is also benefiting from silicon photonics and PAM4 modulation, which make high-bandwidth networking more power-efficient and cost-effective.
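To make the PAM4 point concrete: PAM4 signals with four amplitude levels, so each symbol carries two bits, compared with one bit per symbol for two-level NRZ signaling. The sketch below computes the symbol rate a lane would need under each scheme. The 100 Gb/s lane rate is an assumed round number, and the calculation ignores forward-error-correction overhead.

```python
import math


def bits_per_symbol(levels: int) -> int:
    """Bits carried by one symbol of an amplitude scheme with `levels` levels."""
    return int(math.log2(levels))


def symbol_rate_gbaud(lane_rate_gbps: float, levels: int) -> float:
    """Symbol rate (GBd) needed to carry lane_rate_gbps, ignoring FEC overhead."""
    return lane_rate_gbps / bits_per_symbol(levels)


if __name__ == "__main__":
    lane = 100.0  # Gb/s per lane, assumed round figure
    print("NRZ  (2 levels):", symbol_rate_gbaud(lane, 2), "GBd")
    print("PAM4 (4 levels):", symbol_rate_gbaud(lane, 4), "GBd")
```

Halving the required symbol rate for the same bit rate is what lets PAM4 push more data through each electrical or optical lane.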

3. Better Economics

Ethernet is cheaper—both in terms of equipment cost and operational expense. InfiniBand switches and interconnects are expensive, both to purchase and to manage. Ethernet, by contrast, leverages commodity hardware and widespread talent in the workforce.

InfiniBand: Great for tightly-coupled HPC systems.

Ethernet: Ideal for large-scale, distributed AI/ML environments.

As AI workloads become more cloud-native and span multiple data centers, Ethernet’s simplicity and cost-efficiency become massive advantages.

4. AI Workload Optimization with Ethernet Fabric

Next-gen Ethernet switches and software now support RDMA over Converged Ethernet (RoCE), load balancing, congestion control, and smart telemetry, allowing Ethernet to match or exceed InfiniBand’s performance in many scenarios.
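One of the mechanisms named above, load balancing, is commonly done on Ethernet fabrics by hashing each flow's addressing fields to pick one of several equal-cost uplinks. The sketch below is a simplified illustration only: the `Flow` fields, the use of CRC32, the addresses, and the four-uplink count are all assumptions, and real switches use vendor-specific hash functions implemented in hardware.

```python
import zlib
from collections import Counter
from typing import NamedTuple


class Flow(NamedTuple):
    """Hypothetical 5-tuple identifying one flow."""
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    protocol: int  # 17 = UDP


def pick_uplink(flow: Flow, num_uplinks: int) -> int:
    """Map a flow to an uplink index by hashing its 5-tuple (illustrative only)."""
    key = "|".join(map(str, flow)).encode()
    return zlib.crc32(key) % num_uplinks


if __name__ == "__main__":
    # 16 made-up flows to UDP port 4791, the port RoCEv2 uses.
    flows = [Flow("10.0.0.1", "10.0.1.1", 49152 + i, 4791, 17) for i in range(16)]
    load = Counter(pick_uplink(f, 4) for f in flows)
    print(dict(load))  # flows per uplink; the split is not guaranteed to be even
```

Because a given flow always hashes to the same uplink, its packets stay in order, but a handful of large flows can still land on the same link. That is one reason such fabrics pair load balancing with congestion control and telemetry.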

With vendors like Arista, Cisco, and Broadcom collaborating on high-performance AI Ethernet fabrics, the performance gap has nearly closed—while the flexibility remains unmatched.

The Vision: Ethernet as the AI Backbone

Unified Fabric for All Workloads

Future data centers will not separate AI from other workloads. Ethernet provides a unified fabric for storage, compute, video, AI, and real-time analytics—simplifying management and reducing overhead.

Cloud-Scale AI Over Ethernet

AI workloads are growing beyond single clusters and single sites. They are spanning multiple clouds, hybrid environments, and edge locations.

Ethernet networks already offer mature, widely deployed mechanisms (for example, VLANs and VXLAN overlays) for:

Multi-tenant isolation

Secure segmentation

Interoperability across clouds

This makes it ideal for cloud-scale AI architectures where flexibility is key.

What the Industry Leaders Are Saying

"We're building Ethernet-based AI clusters for multiple hyperscalers," says Jayshree Ullal of Arista.

"The flexibility, economics, and power efficiency of Ethernet are hard to beat."

Companies like Microsoft Azure, Meta, Google Cloud, and even parts of AWS are all experimenting with or moving to Ethernet for their AI networking needs.

This is a turning point. Proprietary fabrics may still dominate niche HPC spaces, but AI at enterprise and hyperscale levels is shifting to Ethernet.

Conclusion: Ethernet Is the Future of AI Networking

The AI revolution is reshaping infrastructure as we know it. In this fast-evolving landscape, Ethernet is not just keeping up—it’s taking the lead by offering:

Open standards and vendor neutrality

Scalable bandwidth up to 1.6 Tbps

Reduced costs and greater flexibility

Enterprise-wide and cloud-native compatibility

With industry giants like Arista, Cisco, Broadcom, and the cloud titans all backing Ethernet, the writing is on the wall.

Ethernet will win the AI networking battle, not by brute force, but by being smarter, more open, and far more adaptable.
